IDC estimates that $6B (out of $27B for big data) will be invested in Hadoop infrastructure in 2015. That’s not bad for an open source technology spawned at Yahoo less than 10 years ago. The onslaught of mobile, social, and, increasingly, sensor data is driving businesses and governments to extract trends, meaning, and information from it.

After all, for an investment of a few hundred thousand dollars, a corporation can build and install a compute cluster to perform analytics and predictions on the collected data. Yahoo, Facebook, Salesforce, Twitter, and other internet-based businesses have already invested significantly. Traditional businesses, such as insurers, banks, medical providers, and manufacturers, are now investing too. These businesses gain insight into their customers and supply chains using cheap, off-the-shelf compute servers and storage and free, open source Hadoop software.

But many companies are finding that their compute clusters, while humming and consuming power in their data centers, are not producing enough results to justify the investment. Hadoop, as implemented, has a number of issues:

- programming the cluster using the Java MapReduce framework can be cumbersome

- the cluster runs as a batch processing system, harking back to the days of the mainframe: jobs are submitted, and the results come back hours later. Once a job is submitted, it’s coffee time and a waiting game.
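To make the first point concrete, here is a minimal, single-process sketch of the MapReduce programming model in plain Python. It is not Hadoop’s Java API: the names `mapper`, `reducer`, and `run_mapreduce` are hypothetical. The programmer supplies a map function and a reduce function, and the framework groups the intermediate key-value pairs by key (the shuffle) in between.

```python
from collections import defaultdict


def mapper(line):
    # Map phase: emit (word, 1) for every word in one input line.
    for word in line.split():
        yield word.lower(), 1


def reducer(word, counts):
    # Reduce phase: sum all the counts emitted for one key.
    return word, sum(counts)


def run_mapreduce(lines, map_fn, reduce_fn):
    # Shuffle phase (hypothetical, in-memory): group intermediate values by key.
    groups = defaultdict(list)
    for line in lines:
        for key, value in map_fn(line):
            groups[key].append(value)
    # Reduce each group; iterating over sorted keys mimics Hadoop's sort by key.
    return [reduce_fn(key, values) for key, values in sorted(groups.items())]


if __name__ == "__main__":
    docs = ["Spark replaces MapReduce", "Hadoop keeps HDFS", "Spark reads HDFS"]
    print(run_mapreduce(docs, mapper, reducer))
    # [('hadoop', 1), ('hdfs', 2), ('keeps', 1), ('mapreduce', 1), ('reads', 1), ('replaces', 1), ('spark', 2)]
```

Even this toy hides most of the real boilerplate (job configuration, input and output formats, packaging). A multi-step analysis in Hadoop also has to be expressed as a chain of such jobs, each writing its output to HDFS before the next one reads it back.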

That’s why Spark has zoomed from a Berkeley AMPLab research project 4 years ago to an important top-level Apache project, with version 1.0 released in May 2014. Spark replaces many pieces of traditional Hadoop, especially its execution engine, MapReduce. Customers often keep HDFS, the Hadoop distributed file system, as their data store.

Much of the IT world is suddenly seeing a far better solution than traditional MapReduce and the batch nature of Hadoop. Even Hadoop 2.x, with its new resource manager, YARN, is overshadowed by the looming Spark. Spark is designed to address the issues highlighted above; specifically, it provides the following:

Deliver much higher developer velocity.

To analyze the streams of data coming from mobile, social, and sensor sources, developers need to write new analytics and machine learning algorithms or adapt existing ones. Spark lets developers write in Scala and Python in addition to Java, and the more succinct code is faster to write and easier to read. Spark also offers a richer programming model than the relatively simplistic MapReduce: data is held in Resilient Distributed Datasets (RDDs), and a job is built by chaining transformations that Spark schedules as a directed acyclic graph (DAG) of stages, which makes programming the cluster much more intuitive. Because Spark can keep working data in memory between steps, instead of writing it to disk and reading it back, turnaround is much faster, and developers can iterate quickly on their programs.
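The core idea of the RDD model is that transformations are only recorded (as a lineage graph) and nothing runs until an action such as `collect()` asks for a result. The toy class below imitates that style in plain Python so it runs without a cluster. `ToyRDD` and its methods are hypothetical stand-ins, not Spark’s API; in PySpark the same word count would read roughly `sc.textFile(path).flatMap(...).map(...).reduceByKey(...)`.

```python
class ToyRDD:
    """Hypothetical single-machine imitation of a Spark RDD (not Spark's API):
    a source collection plus a lazily recorded chain of transformations."""

    def __init__(self, source, steps=None):
        self._source = list(source)
        self._steps = list(steps) if steps else []  # lineage: (name, fn) pairs

    def _then(self, name, fn):
        # Transformations return a new ToyRDD and compute nothing yet.
        return ToyRDD(self._source, self._steps + [(name, fn)])

    def flat_map(self, f):
        return self._then("flat_map", lambda items: [y for x in items for y in f(x)])

    def map(self, f):
        return self._then("map", lambda items: [f(x) for x in items])

    def reduce_by_key(self, f):
        def combine(items):
            totals = {}
            for key, value in items:
                totals[key] = f(totals[key], value) if key in totals else value
            return list(totals.items())
        return self._then("reduce_by_key", combine)

    def lineage(self):
        # The recorded steps (a simple chain here; Spark's lineage is a DAG).
        return [name for name, _ in self._steps]

    def collect(self):
        # Action: only now are the recorded steps executed, in memory, in order.
        items = self._source
        for _, fn in self._steps:
            items = fn(items)
        return items


if __name__ == "__main__":
    lines = ToyRDD(["spark is fast", "spark is lazy"])
    counts = (lines.flat_map(str.split)
                   .map(lambda word: (word, 1))
                   .reduce_by_key(lambda a, b: a + b))
    print(counts.lineage())          # ['flat_map', 'map', 'reduce_by_key']
    print(sorted(counts.collect()))  # [('fast', 1), ('is', 2), ('lazy', 1), ('spark', 2)]
```

Compared with the mapper/reducer/driver split of MapReduce, the whole job is one readable chain. Real Spark goes further than this sketch: it pipelines steps like `flatMap` and `map` within a stage, cuts the graph into stages at shuffles such as `reduceByKey`, and uses the lineage to recompute lost partitions.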

Process data up to 10x-100x faster.

Spark arranges its data processing operations so that intermediate data stays in memory on each compute node of the cluster wherever possible (spilling to disk only when it does not fit), instead of being written to disk between steps as in MapReduce. The result is a tremendous speedup in processing, so customers see results sooner and get a higher return on their Hadoop investment.
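The gain is largest for iterative jobs, such as machine learning algorithms that make many passes over the same data. Written as chained MapReduce jobs, every pass re-reads its input from disk; in Spark, an RDD can be marked with `cache()` (or `persist()`) so later passes reuse the in-memory copy. The toy below only counts simulated storage reads to show the difference. `Storage`, `iterate`, and the pass count are hypothetical, and real speedups depend on data size, available memory, and workload.

```python
class Storage:
    """Hypothetical stand-in for HDFS that counts how often the data is read."""

    def __init__(self, records):
        self._records = list(records)
        self.reads = 0

    def read(self):
        self.reads += 1  # each call models a full pass over disk-resident input
        return list(self._records)


def iterate(storage, passes, cache):
    """Toy iterative job: each pass nudges an estimate halfway toward the mean.

    With cache=False the input is re-read on every pass, as when an iterative
    algorithm is written as a chain of MapReduce jobs; with cache=True it is
    read once and reused from memory, similar in spirit to caching an RDD.
    """
    cached = storage.read() if cache else None
    estimate = 0.0
    for _ in range(passes):
        data = cached if cache else storage.read()
        mean = sum(data) / len(data)
        estimate += 0.5 * (mean - estimate)
    return estimate


if __name__ == "__main__":
    records = [1.0, 2.0, 3.0, 6.0]
    disk, memory = Storage(records), Storage(records)
    print(iterate(disk, passes=10, cache=False), disk.reads)     # 2.9970703125 10
    print(iterate(memory, passes=10, cache=True), memory.reads)  # 2.9970703125 1
```

Both runs compute the same answer; only the number of trips to storage differs, and that is where disk-bound MapReduce pipelines lose their time.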

Offer investment protection for existing Hadoop deployments.

Spark is compatible with traditional Hadoop infrastructure. It can run alongside MapReduce on a Hadoop 2 cluster, using YARN as its resource manager. Spark can read data from Hive or HDFS and often processes it much faster by keeping it in memory; it can also use Tachyon, an in-memory distributed file system, to share in-memory data across jobs.

This last point makes it much easier to consider Spark for an existing Hadoop investment. Hadoop developers are now paying attention and flocking to Spark meetups. Spark only just released version 1.0, so it is still relatively new and subject to the idiosyncrasies of newly developed open source software. Often, though, the benefits above outweigh the risks for traditional Hadoop users and developers who switch to Spark.

There are many other benefits to Spark, such as streaming; we’ll reserve those for later discussions.

On June 24, we had a thought-provoking set of presentations at the … The presentations ended with how Twitter is scaling today by rewriting its backend infrastructure, moving from Ruby on Rails to a new language called …

With less than 1K servers, redundant data centers included,

customers. BTW, this system supports close to … each weekday, at less than 1/4 of a second response time.

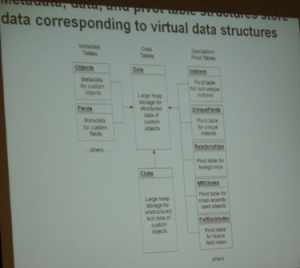

… access to the data is managed by metadata. In essence, the Oracle database is transformed into a multi-tenant database.

Orion Letizi gave … an interesting talk on …

Jeff Xiong, Co-CTO of … The company has implemented a highly scalable system that was able to spread word of the Sichuan earthquake and of Olympics results throughout China within the hour. In addition to IM, Tencent offers email, gaming, and other web services, and it is one of the fastest-growing web services in the world.

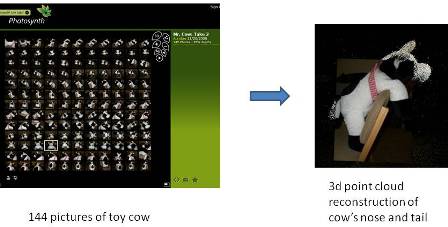

Take 2 or more photographs from different perspectives of an object or a scene,