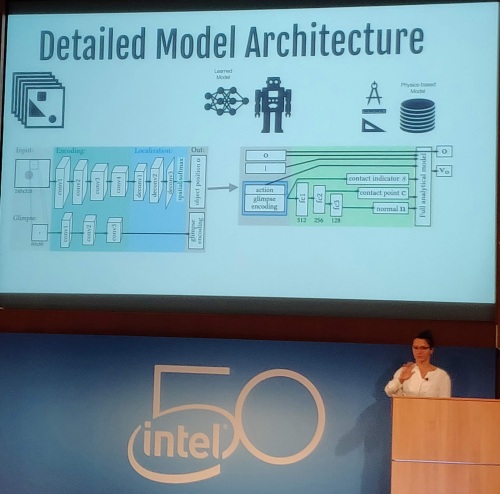

At the IEEE CIS Chapter presentation, Dr. Jeannette Bohg presented her work on using CNN to learn the parameters for the model dynamics of a multi-link robot that pushes an object on a flat table (2D). The CNN processes real time camera images to determine the object position on the table and the proximity to the robot end-effector. The robot knows where it’s end-effector is on the table through it’s sensors.

The output of the CNN is processed into parameters (robot pusher’s movement and position, friction parameters, object’s linear and angular velocities) for the model dynamics. The dynamics model uses these parameters to compute the robot motion to move the object.

The results are good. However, a pure CNN framework that processes the images and predicts the robot motion, produces event better results. However, a trained Bohg network works better than the trained CNN-only network, when the object is change. Thus, this method is more robust to changes.

Dr. Bohg’s talk also covered other topics, such scene flow estimation, all using the idea of using data (via CNN or other deep learning networks) to predict parameters for a dynamics model that account for the physics. The goal is to find a more optimal solution by reducing bias coming from the model-driven approach and reducing the variance coming from the data-driven approach.

You must be logged in to post a comment.